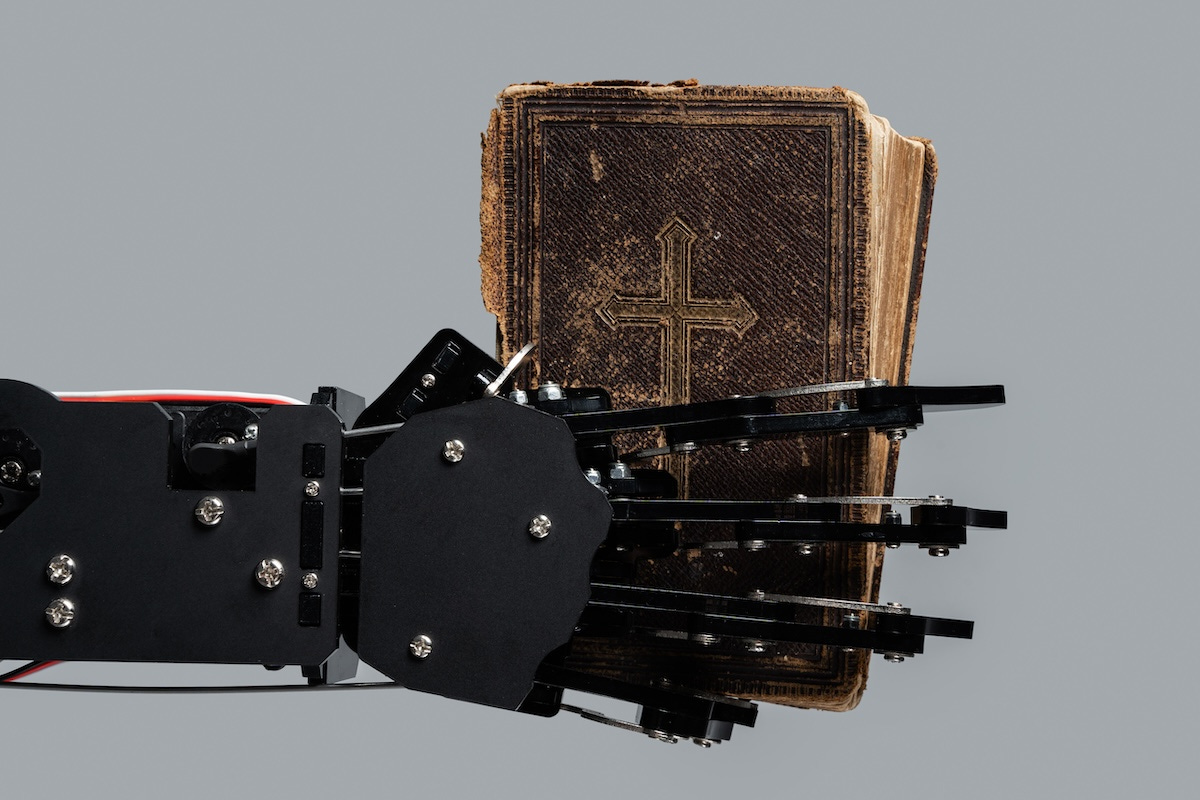

Should AI recommend God?

AI-driven psychosis reminds us that human values are core to engineering

AI had a small retrenchment this week. Nasdaq and the Mag 7 are taking a bit of a step back from their breathtaking highs, and journalists have been flogging lurid news around AI-induced psychosis after getting tired of talking about American Eagle jeans (we can’t all get invited to the Euro Championship in the Oval Office, I suppose, although I do think Zelensky wearing American Eagle would have ignited the final conflagration of the American press).

In other words, it’s the waning days of summer. We are now reaching into the quiver for any morsel of amusement outside our quotidian experience. So naturally, I found myself at a conservative luncheon in midtown recently on the subject of AI, God and the future of faith. The burger and fries were my normal fare; the conversation was not.

At issue was a simple observation. Pop open ChatGPT or Claude, and ask for advice. It’ll be a doctor, a therapist, a business consultant or a lawyer on demand. What it will never be — or not without very intentional prompting — is a priest. The models are secular, and they will never direct a user toward any scripture or catechism without a determined paddle of the prompting raft against the unbelieving current. Should that change?

After all, that blank text box and the worlds it can unfurl are powerful; so powerful in fact that the large model companies employ whole alignment teams to determine whether their products are serving the interests of users and humanity as a whole. What one rarely hears from this community though is a connection between the secular binaries of our H100 overlords and the religious convictions of billions of humans. That fact is particularly striking given the overwhelming largess of the Middle East in propelling AI forward.

Even so, I am a hardened skeptic and was not convinced in the initial volleys of the luncheon. AI alignment experts — like the social media moderation teams combatting misinformation and disinformation before them — seem like nothing more than sanctified high priests of censorship hell-bent on identifying and purging thoughtcrime, far beyond the confines of jurisprudence. (No, I don’t think that alignment will solve bioweapons; it’s far more likely to entrap some poor biomed student just trying to check their homework).

I digress. As the luncheon went on, the debate turned to the degree to which AI models should serve the user and their psychologies (aka sycophancy) versus nudging them toward “human flourishing” in the broadest conception. Instead of agreeing with and reinforcing a user’s innate tendencies, should AI models work in a more reparative role? Should they have some vision of the good life and encourage actions in that direction? Or to put it another way, should they adopt the tone of Reddit’s most charming and arguably popular applied-ethics subreddit, “Am I The Asshole”?

In short, should AI act as one imagines a religious mentor might, offering words of solace at certain moments, hope in others, and sometimes even admonishments and serious censures? Anthropic announced this week that it has now designed Claude to shut down conversations it determines to be “harmful or abusive.” Why only take such small steps?

The human flourishing debate went on for a while, but what I found striking about the luncheon topic was its complete absence from the Silicon Valley dialogue. Sociologically, the AI alignment world is deeply intertwined with the broader rationalist community represented by LessWrong, the blog originally created by the AI researcher Eliezer Yudkowsky, alongside other digital and analog homes. The community (as far as I can observe as a non-member) has very little interaction with religion, outside its penchant for generating some of the strangest cults we’ve ever seen. How many practicing Catholics are on AI safety teams in the archipelago of San Francisco hacker houses? Even with Neo-Catholic churches seemingly on the rise, I’d estimate vegans outnumber the Pope’s flock in the city named for Saint Francis of Assisi.

Yet traditional religions are hardly disengaged. Take the Catholic Church, which published its comprehensive opinion on artificial intelligence earlier this year. It’s a lengthy read, but I enjoyed one of its central arguments (paragraph 33):

Human intelligence is not primarily about completing functional tasks but about understanding and actively engaging with reality in all its dimensions; it is also capable of surprising insights. Since AI lacks the richness of corporeality, relationality, and the openness of the human heart to truth and goodness, its capacities—though seemingly limitless—are incomparable with the human ability to grasp reality. So much can be learned from an illness, an embrace of reconciliation, and even a simple sunset; indeed, many experiences we have as humans open new horizons and offer the possibility of attaining new wisdom. No device, working solely with data, can measure up to these and countless other experiences present in our lives.

For all of the obsession in AI alignment circles about bioweapons and existential risk (reminder: we’re never going to stop bioweapons — ever), how often do we consider how little wisdom AI actually has? It’s never experienced a simple sunset, let alone had a crush that got away. Sure, use it to read an X-ray or a legal document, or ask it to structure a code refactor. But such “functional tasks” are not what make humanity great (and if they are, then please make autonomous killer robots sooner rather than later).

As the luncheon drifted toward its conclusion, I simply observed that the Valley alignment conversation centers almost exclusively on preventing harm. Let AI move forward unchecked except for the things that can cause lasting damage. The inverse — of empowering human flourishing — is a far more hopeful vision. Don’t try to detect psychosis or push users to an AI boyfriend or girlfriend, but instead, help users find flesh-and-blood love since that can never be substituted by pixel-and-byte prompting.

The AI alignment community needs to diversify the voices it takes as input, and quickly. It shouldn’t mimetically follow its own thought patterns, lest it fall into the same trap as the social media moderators who forgot to truly protect online conversation in their quest for domineering control. Alignment is ultimately another word for value systems, and the world has many. Silicon Valley should cultivate its humility on these issues and absorb a far wider set of them in its pursuit of ultimate utility.

I didn’t leave the table agreeing with the group (nor did I leave having eaten dessert or drunk a sherry, with conservatives just as puritanical as progressives, apparently). Alignment, like tech ethics in general, is a topic that always seems to find itself in a perpetual motion machine of points and counterpoints, with little to show for all of the philosophical energy. We’ve covered it once before in Laurence’s post a few weeks ago on the “Cigarette Butler Problem.” What can I say? He had words, I didn’t; I was drinking a nice Chardonnay, and he has access to the publish button. C’est la vie en été.

I did leave lunch thinking that Silicon Valley has a much greater duty to be less impoverished in the import of its products. AI chatbots are the fastest-growing technology in the history of humanity. Upward of a billion people already use these tools regularly, and that figure could hit two billion soon. That’s nothing short of astonishing, and shows the profound intellectual achievement of these technologies and the engineers building them.

That influence demands that questions of values range far wider and come from a more humble perspective than what we’ve witnessed over the past few years. Silicon Valley tends to glom onto a single school of thought rather than a syncretism encompassing the whole range of human understanding and history (the challenge of so many engineers deficient in a humanities education!). That mattered less in the cloud, mobile and SaaS era, but it’s critical to the age of intelligence. Our valuations are retrenching; it’s time our values did as well.